Spoken Language Processing Research Lab

SLP Research Lab

The School of Computer Science and Engineering, The Hebrew University of Jerusalem

The Spoken Language Processing Research Lab (SLP-RL), led by Dr. Yossi Adi at The Hebrew University of Jerusalem, is a dynamic hub for cutting-edge research. Our diverse research interests span spoken language modeling, automatic speech recognition, speech enhancement, speech and audio generation, and machine learning for audio, speech, and language processing.

Our lab collaborates with the global research community to advance speech technology through machine learning and deep learning tools. Our goal is to create adaptive systems that enrich spoken human communication across various languages.

If you would like to pursue graduate studies with us (Master's or PhD), please send your CV, research interests, and example code projects to the following address: yossi.adi@mail.huji.ac.il.

News

- Our research paper GmSLM : Generative Marmoset Spoken Language Modeling got accepted to EMNLP (Findings) 2025!

- Two research papers got accepted at ASRU 2025!

- We are co-organizing a challenge at ICASSP 2026 on Low-Resource Audio Codec (LRAC)!

- Our research paper Scaling Analysis of Interleaved Speech-Text Language Models got accepted to COLM 2025!

- Our research paper CAFA: a Controllable Automatic Foley Artist got accepted to ICCV 2025!

- Our lab is taking part in the organization of iSpeech-2025, the second Israeli seminar on Speech & Audio processing using neural nets.

- Two research papers got accepted at INTERSPEECH 2025!

- Our research paper Slamming: Training a Speech Language Model on One GPU in a Day got accepted to the Findings of ACL 2025!

- Four research papers got accepted at ICASSP 2025!

- Two research papers got accepted at ISMIR 2024!

- Five research papers got accepted at Interspeech 2024!

- We are co-organizing a tutorial at Interspeech 2024 on Recent Advances in Speech Language Models

- Our research paper Masked Audio Generation using a Single Non-Autoregressive Transformer was accepted at ICLR 2024!

- Our lab is co-organizing two special sessions at Interspeech 2024 on SpeechLMs and Discrete speech representation for speech processing.

- Two research papers got accepted at AAAI 2024!

- Two research papers got accepted at EMNLP 2023!

- Four research papers got accepted at NeurIPS 2023!

- Two research papers got accepted at Interspeech 2023!

- Our lab is taking part in the organization of iSpeech-2023, the first Israeli seminar on Speech & Audio processing using neural nets.

- Our research paper ReVISE: Self-Supervised Speech Resynthesis with Visual Input for Universal and Generalized Speech Enhancement was accepted at CVPR 2023!

- Five research papers got accepted to ICASSP 2023!

- We started a new PhD program between HUJI and FAIR, Meta AI.

- Our research paper AudioGen: Textually Guided Audio Generation was accepted at ICLR 2023!

- Our research paper On the Importance of Gradient Norm in PAC-Bayesian Bounds was accepted at NeurIPS 2022!

- Our research paper RemixIT: Continual Self-Training of Speech Enhancement Models via Bootstrapped Remixing was accepted at IEEE JSTSP.

- Five research papers got accepted to Interspeech 2022!

Members

Ph.D. Students

(co-advising with Shmuel Peleg)

(co-advising with Roy Schwartz)

Alumni

- M.Sc. Yuval Ringel (Microsoft)

- M.Sc. Talia Sternberg

- M.Sc. Mickey Finkelson (Lightricks)

- M.Sc. Dor Tenenboim (Meta)

- M.Sc. Avishai Elmakies (IBM)

- M.Sc. Yair Shemer (Mobileye)

- M.Sc. Nadav Har-Tuv (Mobileye)

- M.Sc. Shoval Messica (Mentee Robotics)

- M.Sc. Ella Zeldes

- M.Sc. Amit Roth

- M.Sc. Roy Sheffer (Mobileye)

- M.Sc. Amitay Sicherman (Google)

- M.Sc. Avi Rosen (Snap Inc.)

- M.Sc. Moshe Mandel

Selected Publications

Here is a list of selected publications from our group. For the full list of publications see my Google Scholar.

2025

- Talia Sternberg, Mickey London, David Omer, Yossi Adi. GmSLM : Generative Marmoset Spoken Language Modeling. Findings of Empirical Methods in Natural Language Processing (EMNLP), 2025, [PDF].

- Arnon Turetzky, Nimrod Shabtay, Slava Shechtman, David Haws, Hagai Aronowitz, Ron Hoory, Yossi Adi, Avihu Dekel. Speech Synthesis From Continuous Features Using Per-Token Latent Diffusion. IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), 2025, [PDF].

- Robin San Roman, Manel Khentout, Tu Anh Nguyen, Yossi Adi, Emmanuel Dupoux. Benchmarking Fast Domain Adaptation for Unsupervised Speech Units. IEEE Automatic Speech Recognition and Understanding Workshop (ASRU), 2025, [PDF].

- Gallil Maimon, Michael Hassid, Amit Roth, Yossi Adi. Scaling Analysis of Interleaved Speech-Text Language Models. The 2nd Conference on Language Modeling (COLM), 2025, [PDF, Project page].

- Roi Benita*, Michael Finkelson, Tavi Halperin, Gleb Sterkin, Yossi Adi. CAFA: a Controllable Automatic Foley Artist. The IEEE/CVF International Conference on Computer Vision (ICCV), 2025, [PDF, Project page].

- Iddo Yosha, Dorin Shteyman, Yossi Adi. WHISTRESS: Enriching Transcriptions with Sentence Stress Detection. The 26th Annual Conference of the International Speech Communication Association (Interspeech), 2025, [PDF, Project page].

- Nadav Har-Tuv, Or Tal, Yossi Adi. PAST: Phonetic-Acoustic Speech Tokenizer. The 26th Annual Conference of the International Speech Communication Association (Interspeech), 2025, [PDF, Project page].

- Gallil Maimon*, Avishai Elmakies*, Yossi Adi. Slamming: Training a Speech Language Model on One GPU in a Day. Findings of The 63rd Annual Meeting of the Association for Computational Linguistics (ACL), 2025, [PDF, Project page].

- Gallil Maimon*, Amit Roth*, Yossi Adi. A Suite for Acoustic Language Model Evaluation. The 50th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2025, [PDF, Code & Data].

- Ella Zeldes, Or Tal, Yossi Adi. Enhancing TTS Stability in Hebrew using Discrete Semantic Units. The 50th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2025, [PDF, Code & Demo].

- Robin San Roman, Pierre Fernandez, Antoine Deleforge, Yossi Adi, Romain Serizel. Latent Watermarking of Audio Generative Models. The 50th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2025, [PDF].

- Simon Rouard*, Robin San Roman*, Yossi Adi, Axel Roebel. MusicGen-Stem: Multi-stem music generation and edition through autoregressive modeling. The 50th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2025, [PDF].

2024

- Or Tal*, Alon Ziv*, Itai Gat, Felix Kreuk, Yossi Adi. Joint Audio and Symbolic Conditioning for Temporally Controlled Text-to-Music Generation. The 25th International Society for Music Information Retrieval (ISMIR) Conference, 2024, [PDF].

- Simon Rouard, Jade Copet, Yossi Adi, Axel Roebel, Alexandre Defossez. Audio Conditioning for Music Generation via Discrete Bottleneck Features. The 25th International Society for Music Information Retrieval (ISMIR) Conference, 2024, [PDF].

- Shoval Messica, Yossi Adi. NAST: Noise Aware Speech Tokenization for Speech Language Models. The 25th Annual Conference of the International Speech Communication Association (Interspeech), 2024, [PDF].

- Xuankai Chang, Jiatong Shi, Shinji Watanabe, Yossi Adi, Xie Chen, Qin Jin. The Interspeech 2024 Challenge on Speech Processing Using Discrete Units. The 25th Annual Conference of the International Speech Communication Association (Interspeech), 2024, [PDF].

- Shiran Aziz, Yossi Adi, Shmuel Peleg. Audio Enhancement from Multiple Crowdsourced Recordings: A Simple and Effective Baseline. The 25th Annual Conference of the International Speech Communication Association (Interspeech), 2024, [PDF].

- Amit Roth, Arnon Turetzky, Yossi Adi. A Language Modeling Approach to Diacritic-Free Hebrew TTS. The 25th Annual Conference of the International Speech Communication Association (Interspeech), 2024, [PDF].

- Arnon Turetzky, Or Tal, Yael Segal-Feldman, Yehoshua Dissen, Ella Zeldes, Amit Roth, Eyal Cohen, Yosi Shrem, Bronya R. Chernyak, Olga Seleznova, Joseph Keshet, Yossi Adi. HebDB: a Weakly Supervised Dataset for Hebrew Speech Processing. The 25th Annual Conference of the International Speech Communication Association (Interspeech), 2024, [PDF].

- Jean-Marie Lemercier*, Simon Rouard*, Jade Copet, Yossi Adi, Alexandre Defossez. An Independence-promoting Loss for Music Generation with Language Models. The 41st International Conference on Machine Learning (ICML), 2024, [PDF].

- Alon Ziv, Itai Gat, Gael Le Lan, Tal Remez, Felix Kreuk, Alexandre Defossez, Jade Copet, Gabriel Synnaeve, Yossi Adi. Masked Audio Generation using a Single Non-Autoregressive Transformer. International Conference on Learning Representations (ICLR), 2024, [PDF, Code & Samples].

- Guy Yariv, Itai Gat, Sagie Benaim, Lior Wolf, Idan Schwartz*, Yossi Adi*. Diverse and Aligned Audio-to-Video Generation via Text-to-Video Model Adaptation. The Thirty-Eighth AAAI Conference on Artificial Intelligence (AAAI), 2024, [PDF, Code & Samples].

2023

- Gallil Maimon, Yossi Adi. Speaking Style Conversion With Discrete Self-Supervised Units. Findings of Empirical Methods in Natural Language Processing (EMNLP), 2023, [PDF, Samples & Code].

- Robin Algayres, Yossi Adi, Tu Anh Nguyen, Jade Copet, Gabriel Synnaeve, Benoit Sagot, Emmanuel Dupoux. Generative Spoken Language Model Based on Continuous Word-sized Audio Tokens. Conference on Empirical Methods in Natural Language Processing (EMNLP), 2023, [PDF].

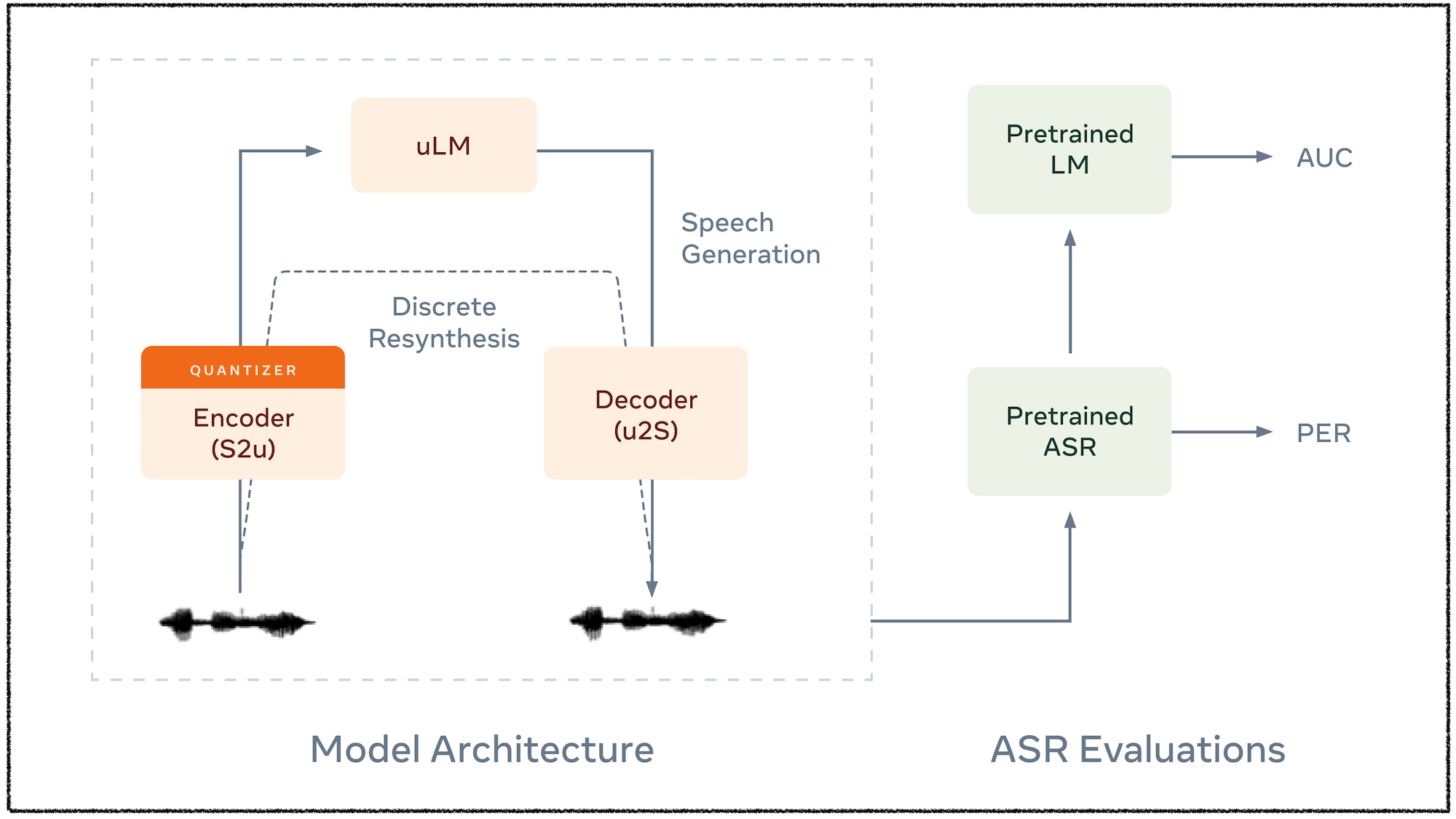

- Michael Hassid, Tal Remez, Tu Anh Nguyen, Itai Gat, Alexis Conneau, Felix Kreuk, Jade Copet, Alexandre Defossez, Gabriel Synnaeve, Emmanuel Dupoux, Roy Schwartz, Yossi Adi. Textually Pretrained Speech Language Models. The 37th Annual Conference on Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 2023, [PDF, Samples].

- Robin San Roman, Yossi Adi, Antoine Deleforge, Romain Serizel, Gabriel Synnaeve, Alexandre Défossez. From Discrete Tokens to High-Fidelity Audio Using Multi-Band Diffusion. The 37th Annual Conference on Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 2023, [PDF, Code, Samples].

- Jade Copet, Felix Kreuk, Itai Gat, Tal Remez, David Kant, Gabriel Synnaeve, Yossi Adi, Alexandre Défossez. Simple and Controllable Music Generation. The 37th Annual Conference on Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 2023, [PDF, Code, Samples, Demo].

- Guy Yariv, Itai Gat, Lior Wolf, Yossi Adi*, Idan Schwartz*. Adaptation of Text-Conditioned Diffusion Models for Audio-to-Image Generation. The 24th Annual Conference of the International Speech Communication Association (Interspeech), 2023, [PDF, Code, Demo, Samples].

- Tu Anh Nguyen, Wei-Ning Hsu, Antony d'Avirro, Bowen Shi, Itai Gat, Maryam Fazel-Zarandi, Tal Remez, Jade Copet, Gabriel Synnaeve, Michael Hassid, Felix Kreuk, Yossi Adi*, Emmanuel Dupoux*. Expresso: A Benchmark and Analysis of Discrete Expressive Speech Resynthesis. The 24th Annual Conference of the International Speech Communication Association (Interspeech), 2023, [PDF, Dataset].

- Wei-Ning Hsu, Tal Remez, Bowen Shi, Jacob Donley, Yossi Adi. ReVISE: Self-Supervised Speech Resynthesis with Visual Input for Universal and Generalized Speech Enhancement. The Conference on Computer Vision and Pattern Recognition (CVPR), 2023, [PDF, Samples].

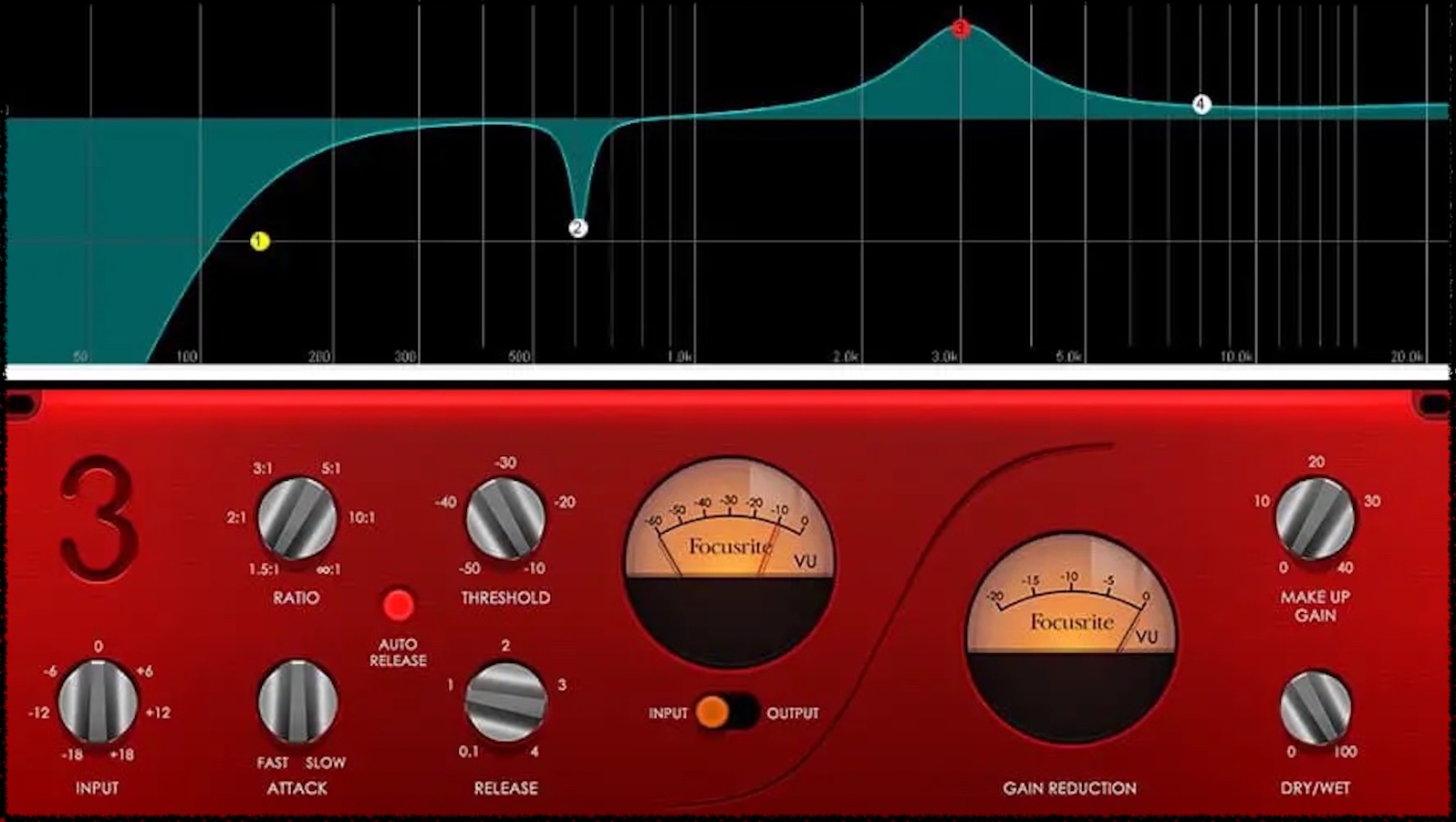

- Moshe Mandel, Or Tal, Yossi Adi. AERO: Audio Super Resolution in the Spectral Domain. The 48th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2023, [PDF, Code, Samples].

- Roy Sheffer, Yossi Adi. I Hear Your True Colors: Image Guided Audio Generation. The 48th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2023, [PDF, Code, Samples].

- Amitay Sicherman, Yossi Adi. Analyzing Discrete Self Supervised Speech Representation For Spoken Language Modeling. The 48th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2023, [PDF, Code, Tool].

- Felix Kreuk, Gabriel Synnaeve, Adam Polyak, Uriel Singer, Alexandre Defossez, Jade Copet, Devi Parikh, Yaniv Taigman, Yossi Adi. AudioGen: Textually Guided Audio Generation. International Conference on Learning Representations (ICLR), 2023, [PDF, Samples].

2021-2022

- Arnon Turetzky, Tzvi Michelson, Yossi Adi, Shmuel Peleg. Deep Audio Waveform Prior. The 23rd Annual Conference of the International Speech Communication Association (Interspeech), 2022, [PDF].

- Or Tal, Moshe Mandel, Felix Kreuk, Yossi Adi. A Systematic Comparison of Phonetic Aware Techniques for Speech Enhancement. The 23rd Annual Conference of the International Speech Communication Association (Interspeech), 2022, [PDF, Code].

- Efthymios Tzinis, Yossi Adi, Vamsi K. Ithapu, Buye Xu, Anurag Kumar. Continual Self-Training with Bootstrapped Remixing For Speech Enhancement. The 47th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2022, [PDF].

- Shahaf Bassan, Yossi Adi, Jeffrey S. Rosenschein. Unsupervised Symbolic Music Segmentation using Ensemble Temporal Prediction Errors. The 23rd Annual Conference of the International Speech Communication Association (Interspeech), 2022, [PDF].

- Efthymios Tzinis, Yossi Adi, Vamsi Krishna Ithapu, Buye Xu, Paris Smaragdis, Anurag Kumar. RemixIT: Continual Self-Training of Speech Enhancement Models via Bootstrapped Remixing. IEEE Journal of Selected Topics in Signal Processing, 2022, [PDF].

- Shahar Segal, Yossi Adi, Benny Pinkas, Carsten Baum, Chaya Ganesh, Joseph Keshet. Fairness in the Eyes of the Data: Certifying Machine-Learning Models. The Fourth AAAI/ACM Conference on Artificial Intelligence, Ethics, and Society (AIES), 2021, [PDF].

Open-source

At our laboratory, we are dedicated to open source and open research principles. All our source code and pre-trained models are publicly available, and we actively gather diverse spoken datasets in multiple languages for training and evaluating speech technologies. By embracing openness, we aim to drive innovation and collaboration in speech technology globally.

Code & Datasets

All source code, pre-trained models, and datasets are available on the SLP Research Lab GitHub page and HuggingFace page.