Memory Access Patterns

Introduction

GPU programming is a challenging task. In order to make use of the multitude of cores and efficient memory system, developers must have a deep understanding of the underlying architecture an application is programmed for. What's more, architectures change over time, and even two GPU models within the same architecture may require different settings for applications to be maximally efficient. This problem is exacerbated when programming to multiple GPUs on the same node.

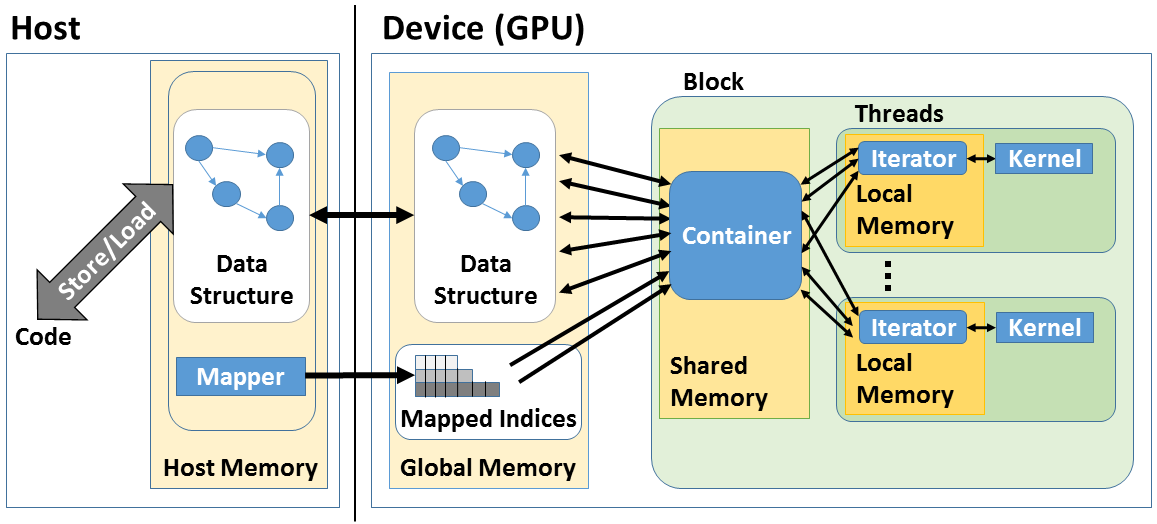

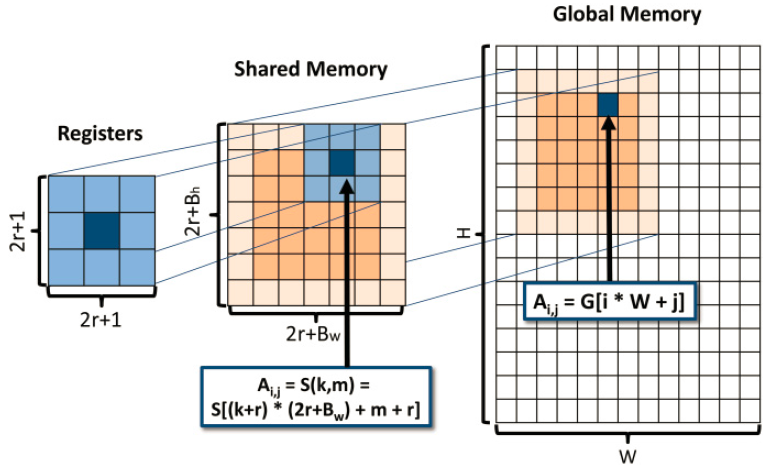

Most of the time, the source of GPU and multi-GPU under-utilization is suboptimal memory access/transfer, either within a GPU, between GPUs, or between the GPUs and the main memory. Programmers often have to deal with different indexing systems (e.g., host memory, GPU global memory, shared memory, registers, see image below), and maintain a lengthy, often unintelligible code over time.

In general, the learning curve for optimized GPU programming is steep, decreasing programmer productivity and increasing debugging time. However, most of the GPU applications usually boil down to the same memory access patterns, ultimately causing programmers create similar mechanisms independently.

Patterns

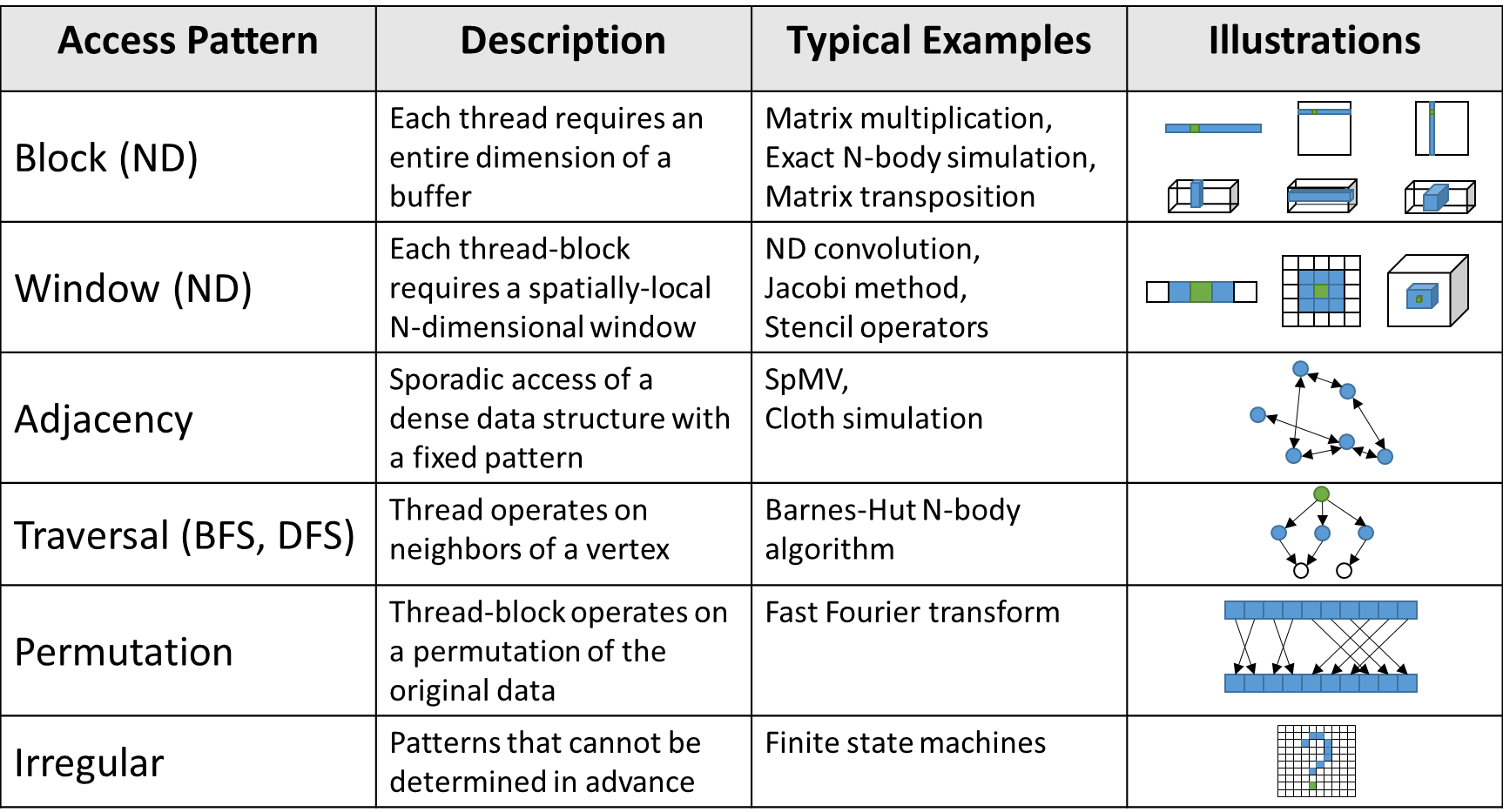

Following an extensive research on parallel algorithms, we compiled a list of memory access patterns that cover the vast majority of GPU and multi-GPU applications. The input patterns are as follows:

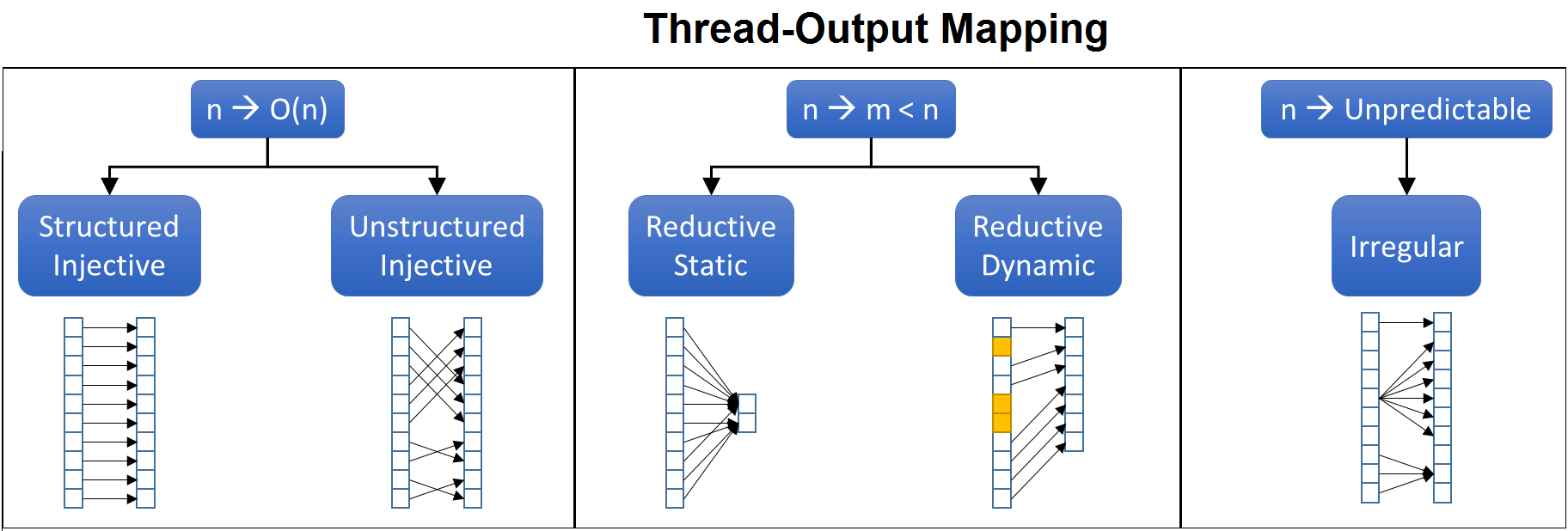

Output memory access patterns were similarly constructed, using all possibilities to map threads to outputs:

The above patterns determines how the work of a kernel is segmented:

- Between thread-blocks and threads of a single GPU

- Between different GPUs on the same node, creating memory interdependencies

Workflow

Based on the above patterns, the Memory Access Pattern Specification (MAPS) framework provides an easy-to-use host- and device-level APIs to create highly optimized GPU applications. The library is standard C++11 and header-based, allowing any application to be augmented using a single "#include".

To work with MAPS, programmers can either use the device-level API directly, or create a Scheduler, which automatically partition tasks to all GPUs (or a specified subset). In the application, each GPU data structure is defined as an N-dimensional Datum handle object, which can be bound to existing host (main) memory using the Bind method.

Work is defined by Tasks, which are tuples that contain:

- Parameters, each one defined as a Datum handle and its respective pattern

- Constants, which are fixed-sized parameters that should be available to all threads

- Work dimensions, corresponding to the grid and thread-block GPU dimensions

- Kernel code, passed as a function pointer

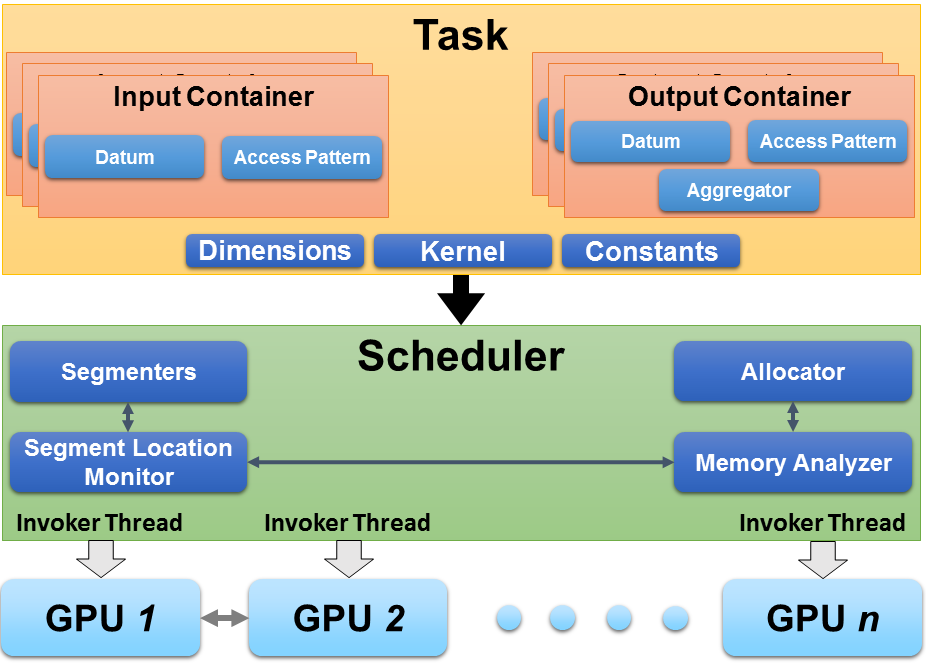

To run such tasks, they must all first be analyzed with respect to their patterns in the Scheduler (once per task type). This process aids the framework in determining the optimal allocation size for each buffer on each GPU. After all task types have been analyzed, they can simply be executed using the Invoke method of the Scheduler. The Invoke method ensures that the correct data is copied to each GPU (without redundancy), handling boundary exchanges and host-device transfers automatically. To collect the data from the GPUs back to the main memory, the Gather method should be called. The figure below depicts the host-level infrastructure of MAPS.

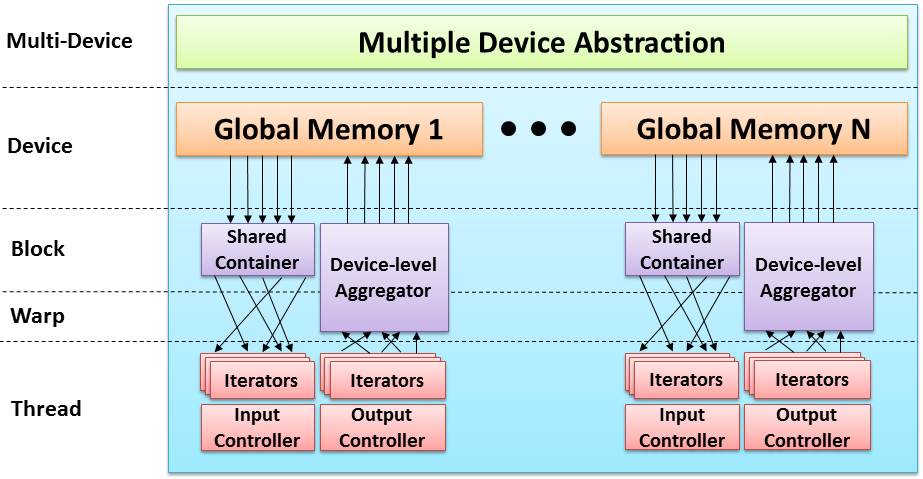

On the GPUs, the parameters are represented as Container data structures, which are initialized using a single line (or manually, using the Advanced API). From these containers, each thread creates an iterator that traverses the required data using a FOREACH loop. Internally, the framework decides where to store the information (shared memory, registers) according to the patterns for maximal efficiency.

In some patterns, it is necessary to traverse a large amount of information. This is performed in chunked access, represented by a do-while loop on the available chunks. Methods that efficiently load (and sometimes prefetch) the next chunks are available to the programmer, provided along with manual methods in the Advanced API. For an example, see the Matrix Multiplication example in the Showcase.

To support Instruction-Level Parallelism (ILP) optimizations, MAPS allows a single thread to process multiple elements. This is performed via the output iterators, which may contain multiple elements. Inside an output FOREACH loop, iterators for input containers can be created using either the align container method or the MAPS_FOREACH_ALIGNED loop macro. This ensures that each output element processes its respective inputs, allocating GPU registers efficiently.

To store the outputs to the global memory, the commit output container method has to be called. This allows the system to output memory in an ordered manner, enabling further optimizations, such as warp-level aggregation. The device-level infrastructure is illustrated in the figure below:

The entire process is depicted in the following figure: