Abstract

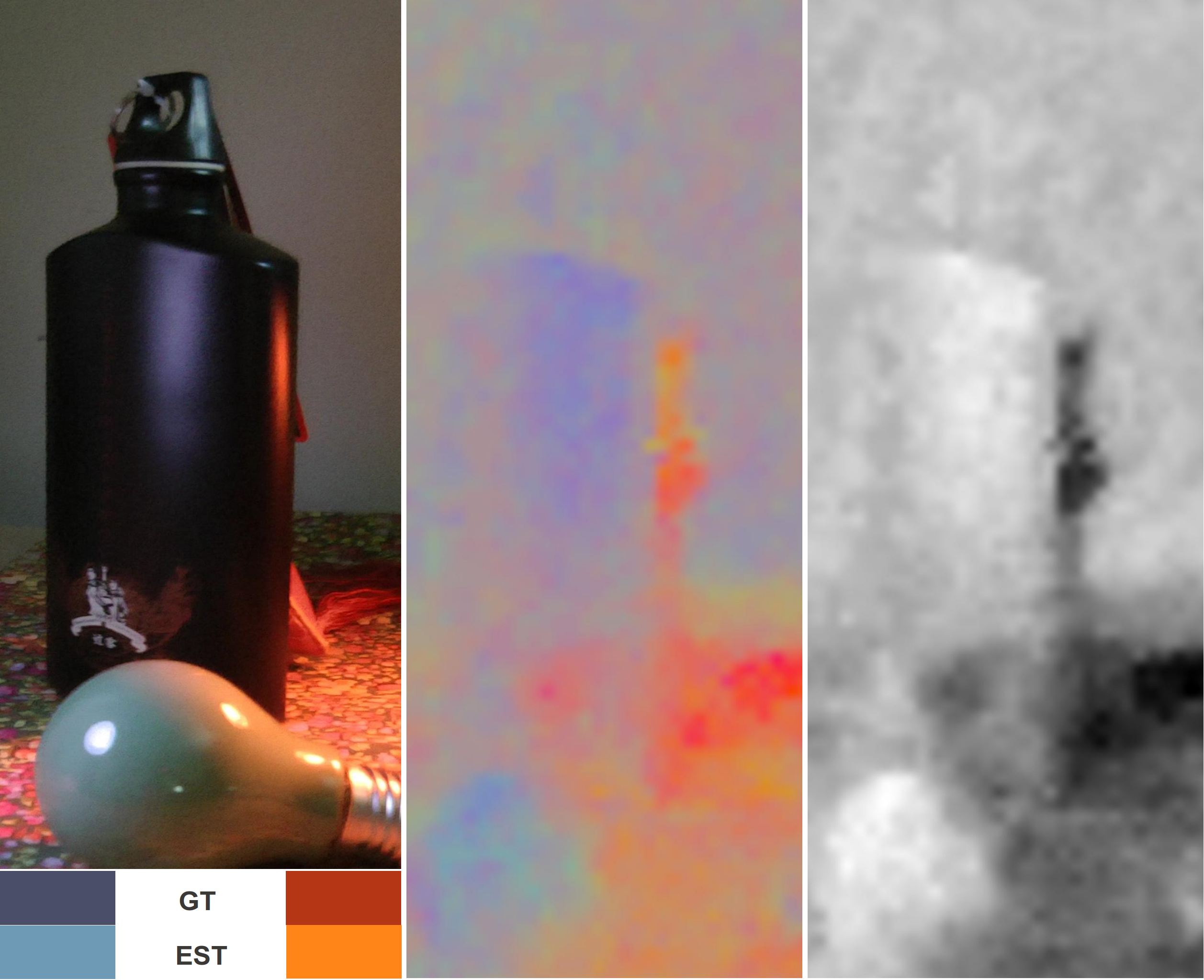

We estimate illuminant chromaticity from temporal sequences of scenes lit by one or two dominant illuminants. While many methods estimate the illuminant from a single image, few works so far have addressed video, and fewer still multiple light sources. Our aim is to exploit the information provided by temporal acquisition, in which the objects, the camera, or the light source are in motion, to estimate illuminant color without user interaction and without strong assumptions or heuristics.

We introduce a simple physically-based formulation that assumes the incident light chromaticity is constant over a short space-time domain. We show that a deterministic approach is not sufficient for accurate and robust estimation; a probabilistic formulation, however, makes it possible to implicitly integrate out hidden factors that the physical model ignores.

Experimental results are reported on a dataset of natural video sequences and on the GrayBall benchmark, indicating that our method compares favorably with the state of the art.

@InProceedings{vdm_iccv2013,

author = {V. Prinet and D. Lischinski and M. Werman},

title = {Illuminant Chromaticity from Image Sequences},

booktitle = {International Conference on Computer Vision (ICCV)},

year = {2013},

month = {December}}

Erratum: One of the two-illuminant sequences used in our experiments, the 'shadow' sequence, covers part of the gray card used as ground truth. We therefore recommend *not* using this sequence for evaluation or comparison.
